This is a short summary of Data Driven NYC’s interview with Shreya Rajpal, co-founder and CEO of Guardrails AI.

What Guardrails AI offers

Guardrails AI is a fully open-source library that adds assurance to interactions with Large Language Models (LLMs). It offers:

- A framework for creating custom validators.

- Orchestration of prompting → verification → re-prompting.

- A library of commonly used validators for multiple use cases.

- A specification language for communicating requirements to LLMs.
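To make the "custom validators" and "prompting → verification → re-prompting" ideas concrete, here is a minimal sketch in plain Python. It is not the Guardrails AI API: `ValidationResult`, `max_length`, `no_banned_words`, `guarded_call` and `fake_llm` are hypothetical names chosen for illustration, and the model is a stub that only improves after it sees validation feedback.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ValidationResult:
    passed: bool
    message: str = ""


# Hypothetical: a validator is any function that inspects an LLM output and reports pass/fail.
Validator = Callable[[str], ValidationResult]


def max_length(limit: int) -> Validator:
    """Hypothetical custom validator: reject outputs longer than `limit` characters."""
    def check(output: str) -> ValidationResult:
        if len(output) <= limit:
            return ValidationResult(True)
        return ValidationResult(False, f"Output must be at most {limit} characters.")
    return check


def no_banned_words(words: List[str]) -> Validator:
    """Hypothetical custom validator: reject outputs containing any banned word (case-insensitive)."""
    def check(output: str) -> ValidationResult:
        found = [w for w in words if w.lower() in output.lower()]
        if not found:
            return ValidationResult(True)
        return ValidationResult(False, f"Remove these words: {', '.join(found)}.")
    return check


def guarded_call(llm: Callable[[str], str], prompt: str,
                 validators: List[Validator], max_retries: int = 2) -> Optional[str]:
    """Prompt -> verify -> re-prompt until every validator passes or retries run out."""
    current_prompt = prompt
    for _ in range(max_retries + 1):
        output = llm(current_prompt)
        failures = [r.message for r in (v(output) for v in validators) if not r.passed]
        if not failures:
            return output
        # Re-prompt: feed the validation errors back to the model.
        current_prompt = (prompt + "\n\nYour previous answer was rejected:\n"
                          + "\n".join(failures) + "\nPlease try again.")
    return None  # every attempt failed validation


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model: answers badly first, then complies once corrected.
    if "rejected" in prompt:
        return "Our product is reliable."
    return "Our product is guaranteed to be perfect in every possible way!"


answer = guarded_call(fake_llm, "Describe our product.",
                      [max_length(40), no_banned_words(["guaranteed"])])
print(answer)  # Our product is reliable.
```

Returning `None` when retries are exhausted is one possible design choice; a real system might instead raise an error, fall back to a canned answer, or escalate to a human.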

What the company is building right now:

- Runtime guards.

- Validators: an independent check for each identified risk you have registered (for instance, a given type of hallucination).
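The idea of registering each identified risk and attaching an independent check to it can be sketched as a small registry that runs every check on each model output at run time. This is an illustrative assumption, not Guardrails AI code: `RiskRegistry`, `numbers_grounded` and `no_email_addresses` are hypothetical, and the regex-based checks are deliberately crude stand-ins for real hallucination and data-leak detectors.

```python
import re
from typing import Callable, Dict, List

# Hypothetical check signature: (model output, source text) -> passed?
Check = Callable[[str, str], bool]


class RiskRegistry:
    """Hypothetical registry mapping each identified risk to an independent check."""

    def __init__(self) -> None:
        self._checks: Dict[str, Check] = {}

    def register(self, risk: str, check: Check) -> None:
        self._checks[risk] = check

    def run(self, output: str, source: str) -> List[str]:
        """Run every registered check independently; return the risks that fired."""
        return [risk for risk, check in self._checks.items() if not check(output, source)]


NUMBER = r"\d+(?:\.\d+)?"


def numbers_grounded(output: str, source: str) -> bool:
    # Crude hallucination check: every number in the output must also appear in the source.
    source_numbers = set(re.findall(NUMBER, source))
    return all(n in source_numbers for n in re.findall(NUMBER, output))


def no_email_addresses(output: str, source: str) -> bool:
    # Crude data-leak check: the output must not contain anything shaped like an email address.
    return re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", output) is None


registry = RiskRegistry()
registry.register("hallucinated_number", numbers_grounded)
registry.register("pii_leak", no_email_addresses)

source = "Revenue grew 12% in 2023 to 4.5 million dollars."
print(registry.run("Revenue grew 12% in 2023.", source))  # []
print(registry.run("Revenue grew 15% in 2023; contact cfo@example.com.", source))
# ['hallucinated_number', 'pii_leak']
```

Keeping each check independent means a new risk can be registered without touching the existing ones, and the list of fired risks tells you exactly which guard tripped.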

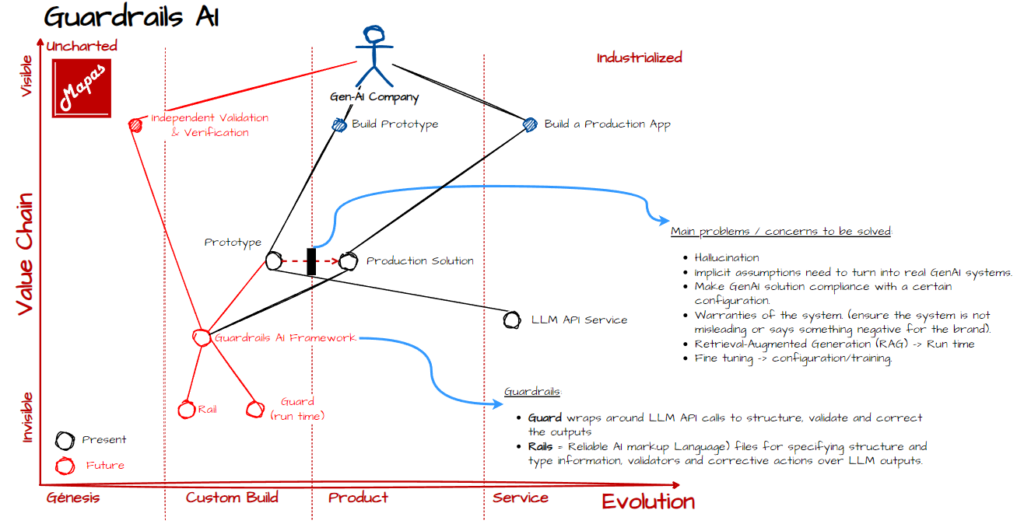

A Map

The following Wardley Map visually illustrates the problem Guardrails AI is trying to solve:

- Independent validation and verification will become common in the near future, not only for compliance reasons but also as real-time checks that keep GenAI solutions from making mistakes or damaging the brand.

- Guardrails AI is still being built, which is why it appears in red on the map.

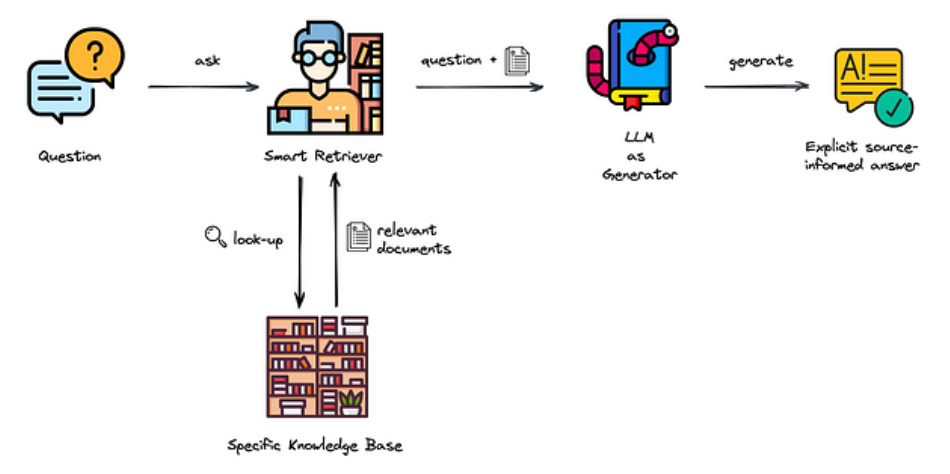

Retrieval-Augmented Generation (RAG)

RAG is a technique that combines the abilities of a pre-trained language model with an external knowledge source to enhance its performance, especially in providing up-to-date or very specific information.

A simple explanation:

- Retrieval: When you ask a question, the system first retrieves relevant information from a large database of text. It’s like looking up reference material to find the best possible answers.

- Augmented: The retrieved information is then added to the model’s input (typically the prompt) alongside the question, complementing the knowledge already stored in the language model.

- Generation: Finally, the system generates a response using both its pre-trained knowledge and the additional information it just retrieved.

The advantage of RAG is that it allows the model to provide more accurate and up-to-date responses than it could with just its pre-trained knowledge.
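The three RAG steps can be sketched in a few lines of Python. This is a toy under stated assumptions: retrieval ranks documents by simple word overlap (real systems usually use embedding or vector search), and `echo_llm` is a hypothetical placeholder for an actual model call. `retrieve`, `augment` and `rag_answer` are illustrative names, not a specific library’s API.

```python
import re
from typing import Callable, List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Retrieval: rank documents by word overlap with the question (stand-in for embedding search)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(question: str, passages: List[str]) -> str:
    """Augmentation: put the retrieved passages into the prompt next to the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the context below.\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"


def rag_answer(question: str, documents: List[str],
               llm: Callable[[str], str], k: int = 2) -> str:
    """Generation: the model answers from the augmented prompt."""
    return llm(augment(question, retrieve(question, documents, k)))


def echo_llm(prompt: str) -> str:
    # Hypothetical placeholder model: a real system would send the prompt to an LLM here.
    return prompt


docs = [
    "Guardrails AI is an open-source library for validating LLM outputs.",
    "RefChecker was published by Amazon to detect hallucinations.",
    "Wardley Maps show how components of a value chain evolve.",
]
print(retrieve("Who published RefChecker?", docs, k=1))
# ['RefChecker was published by Amazon to detect hallucinations.']
print(rag_answer("Who published RefChecker?", docs, echo_llm))
```

Because the knowledge lives in `docs` rather than in the model’s weights, updating the answer only requires updating the documents, which is the advantage described above.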

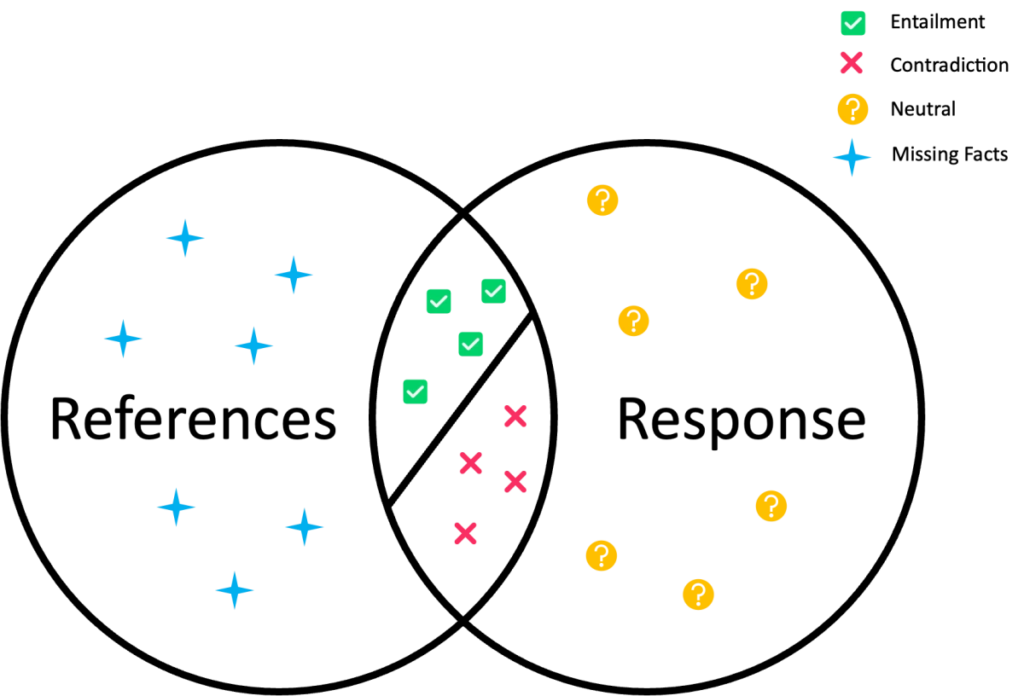

Update January 2024: Amazon RefChecker

Amazon has published RefChecker, a GitHub repository with tooling to detect hallucinations and improve the quality of responses given by LLMs. Its initial scope is narrower than that of Guardrails AI: it focuses on checking responses against references.
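To illustrate what a reference check means, here is a toy sketch that labels each sentence of a response as supported or unsupported by a reference text. It is not RefChecker’s method or API: `check_against_reference`, `content_words`, the stopword list and the 0.8 word-overlap threshold are all hypothetical choices, and the overlap rule is a crude stand-in for the entailment-style checking a real tool would perform.

```python
import re
from typing import Dict, Set

# Hypothetical minimal stopword list for the toy overlap rule.
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "to", "and", "by", "it", "its"}


def content_words(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def check_against_reference(response: str, reference: str,
                            threshold: float = 0.8) -> Dict[str, str]:
    """Label each sentence of the response as 'supported' or 'unsupported'.

    A sentence counts as supported when at least `threshold` of its content words
    also occur in the reference text.
    """
    ref_words = content_words(reference)
    labels: Dict[str, str] = {}
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = content_words(sentence)
        if not words:
            continue  # nothing checkable in this sentence
        coverage = len(words & ref_words) / len(words)
        labels[sentence] = "supported" if coverage >= threshold else "unsupported"
    return labels


reference = "RefChecker was released by Amazon to detect hallucinations in large language models."
response = "Amazon released RefChecker to detect hallucinations. It was released by Google in 2019."
for claim, label in check_against_reference(response, reference).items():
    print(f"{label:12} {claim}")
```

In this example the second sentence is flagged because "google" and "2019" never appear in the reference, which is the kind of unsupported claim a reference checker is meant to surface.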

https://www.amazon.science/blog/new-tool-dataset-help-detect-hallucinations-in-large-language-models

As usual, any constructive feedback is welcome.