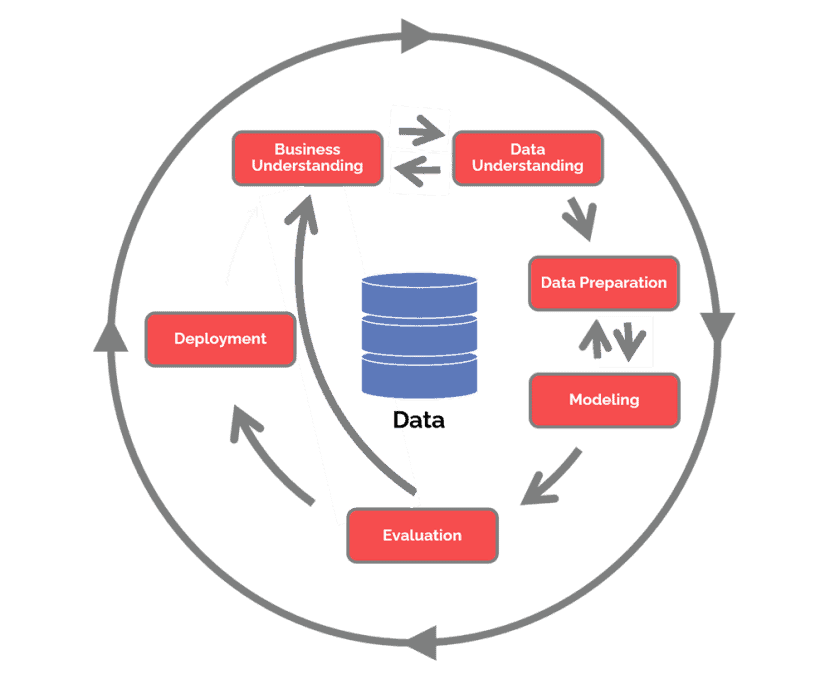

The Cross-Industry Standard Process for Data Mining (CRISP-DM) is an open standard process model for planning data mining projects, created in 1996.

CRISP-DM is divided into six phases:

- Business understanding – What does the business need?

- Data understanding – What data do we have / need? Is it clean?

- Data preparation – How do we organize the data for modeling?

- Modeling – What modeling techniques should we apply?

- Evaluation – Which model best meets the business objectives?

- Deployment – How do stakeholders access the results?

1. Business understanding

Basic checklist:

- Understand business requirements

- Form a business question

- Turn the business question into one or more ML questions

- Define criteria for successful outcome of the project

- Highlight the project's critical features

- List assumptions

- List resources (especially data resources)

- List risks and potential mitigation actions

- Build a return on investment (ROI) calculation
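
The ROI item in the checklist above can be sketched as a small calculation. This is a minimal illustration that assumes the common definition ROI = (gain - cost) / cost; the function name `roi` and the figures are hypothetical and do not come from any real project.

```python
def roi(expected_gain: float, total_cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (expected_gain - total_cost) / total_cost


# Hypothetical figures, for illustration only.
project_roi = roi(expected_gain=150_000, total_cost=100_000)
print(f"ROI: {project_roi:.0%}")
```

In practice the gain and cost estimates usually carry the most uncertainty, so it may help to compute the ROI for a pessimistic and an optimistic scenario as well.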

2. Data understanding

Basic checklist:

- Data collection

  - Define steps to extract data

  - Analyze the data to detect additional requirements

  - Consider other data sources

- Data properties

  - Describe data: amount, metadata, properties

  - Find features and relationships in the data

- Quality

  - Verify attributes

  - Identify missing data

  - Reveal inconsistencies

  - List problems
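
The quality checks in this checklist can be sketched with plain Python. The example below is only an illustration: the `records` sample and the helpers `missing_counts` and `inconsistent_casing` are hypothetical names, and a real project would more likely use a data-frame library for the same checks.

```python
from collections import Counter

# Hypothetical records; None marks a missing value.
records = [
    {"customer_id": 1, "country": "ES", "age": 34},
    {"customer_id": 2, "country": "es", "age": None},
    {"customer_id": 3, "country": None, "age": 51},
    {"customer_id": 4, "country": "FR", "age": 29},
]


def missing_counts(rows):
    """Count missing (None) values per column."""
    counts = Counter()
    for row in rows:
        for column, value in row.items():
            if value is None:
                counts[column] += 1
    return dict(counts)


def inconsistent_casing(rows, column):
    """Group values of a text column that differ only by letter case."""
    seen = {}
    for row in rows:
        value = row[column]
        if value is not None:
            seen.setdefault(value.upper(), set()).add(value)
    return {key: sorted(variants) for key, variants in seen.items() if len(variants) > 1}


print("rows:", len(records))
print("missing values per column:", missing_counts(records))
print("inconsistent country codes:", inconsistent_casing(records, "country"))
```

The output of checks like these is a natural starting point for the list of problems that closes the checklist.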

3. Data preparation

Basic checklist:

- Final dataset selection

  - Analyze constraints: size, included/excluded columns, record selection, data types

- Final dataset preparation

  - Steps: clean, transform, merge, format

  - Decide how to handle missing data, and take notes about these decisions.

  - Decide how to handle missing properties, and take notes about these decisions.
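
One way to handle missing data while keeping notes about each decision is sketched below. The sample `rows`, the `decisions` log and the two strategies shown (dropping rows, imputing the median) are assumptions chosen for illustration; the right strategy depends on the dataset and the business question.

```python
from statistics import median

# Hypothetical input; None marks a missing value.
rows = [
    {"country": "ES", "age": 34},
    {"country": "ES", "age": None},
    {"country": None, "age": 51},
    {"country": "FR", "age": 29},
]

decisions = []  # running log of preparation decisions

# Decision 1: drop rows without a country, since it cannot be inferred here.
prepared = [dict(row) for row in rows if row["country"] is not None]
decisions.append("Dropped rows with missing 'country'.")

# Decision 2: impute missing ages with the median of the observed ages.
observed_ages = [row["age"] for row in prepared if row["age"] is not None]
age_median = median(observed_ages)
for row in prepared:
    if row["age"] is None:
        row["age"] = age_median
decisions.append(f"Imputed missing 'age' with the median ({age_median}).")

print(prepared)
print(decisions)
```

Keeping the log next to the code makes it easier to justify each choice later in the final report.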

4. Modeling

Basic checklist:

- Select a model and create it

- Create a testing plan for that model

- Split training and testing datasets

- Define the model evaluation criteria

- Tune and test the different available parameters

- Tweak the model for better performance

- Describe the trained models and report findings
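
The split-and-tune steps above can be sketched with a toy example. Everything here is hypothetical: the data, the one-parameter threshold "model", and the helpers `train_test_split` and `accuracy` written for this sketch (they are not library functions). A real project would typically also hold out a separate validation set for tuning.

```python
import random

# Hypothetical toy data: one numeric feature; the label is 1 when it is >= 50.
data = [(x, int(x >= 50)) for x in range(100)]


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and split it into train and test parts."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(rows, threshold):
    """Evaluation criterion: share of rows where the threshold rule is right."""
    correct = sum(int(x >= threshold) == label for x, label in rows)
    return correct / len(rows)


train, test = train_test_split(data)

# Tune the single parameter on the training data only.
candidates = [30, 40, 50, 60, 70]
best_threshold = max(candidates, key=lambda t: accuracy(train, t))

print("best threshold:", best_threshold)
print("train accuracy:", accuracy(train, best_threshold))
print("test accuracy:", accuracy(test, best_threshold))
```

The test set is only touched once, after tuning, so its score remains an honest estimate of performance on unseen data.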

5. Evaluation

Basic checklist:

- What is the accuracy of the model?

- How well does the model generalize to unseen/unknown data?

- Perform quality assurance checks

- Were any important criteria overlooked?

- What is the model's performance on a predetermined dataset?

- Is data available for future training?

- Does the model meet the success criteria? (yes/no)
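
The final yes/no question can be made explicit by comparing measured metrics with the success criteria defined during business understanding. The sketch below assumes a binary classifier; the labels, predictions and thresholds in `success_criteria` are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical held-out labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

# Hypothetical minimum values agreed with the business.
success_criteria = {"accuracy": 0.70, "recall": 0.80}

metrics = classification_metrics(y_true, y_pred)
meets_criteria = all(
    metrics[name] >= minimum for name, minimum in success_criteria.items()
)

print(metrics)
print("meets success criteria:", "yes" if meets_criteria else "no")
```

Accuracy alone can look acceptable while another agreed metric fails, which is why the check runs over every criterion rather than a single score.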

6. Deployment

Basic checklist:

- Planning deployment

  - Understand infrastructure deployment requirements and processes

  - Understand application deployment requirements and processes

  - Understand required environments to deploy (testing, QA, production)

  - Determine how code is going to be managed between environments (probably done already)

- Maintenance and monitoring

  - Enable the required monitoring tools, views and reports

  - Review data quality thresholds

- Final report

  - Document all processes used in the project

  - Review all goals defined for the project and whether each was met or not (and the reasons)

  - Detail findings of the project

  - Explain the model used and why it was selected as the right one

  - Explain the models that were tested but finally discarded, and the reasons for discarding them

  - Identify the customer groups using this model

- Project review

  - Summarize the results and present to stakeholders
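
The data quality thresholds mentioned under maintenance and monitoring could be checked on each incoming batch along these lines. This is a rough sketch: the helpers `missing_rate` and `check_quality`, the column names and the limits in `max_missing_rate` are hypothetical, and a production setup would normally send the alerts to whatever monitoring tool is in use.

```python
def missing_rate(rows, column):
    """Share of rows where the column is missing (None or absent)."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(column) is None)
    return missing / len(rows)


def check_quality(rows, max_missing_rate):
    """Return the columns whose missing rate exceeds the agreed limit."""
    alerts = {}
    for column, limit in max_missing_rate.items():
        rate = missing_rate(rows, column)
        if rate > limit:
            alerts[column] = rate
    return alerts


# Hypothetical limits: at most 10% missing ages and 5% missing countries.
max_missing_rate = {"age": 0.10, "country": 0.05}

# Hypothetical batch of 10 records, two of them without an age.
batch = [{"age": 30 + i, "country": "ES"} for i in range(8)]
batch += [{"age": None, "country": "FR"}, {"age": None, "country": "FR"}]

alerts = check_quality(batch, max_missing_rate)
print("columns over threshold:", alerts)
```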